Technical Report: Long-Prompt Text-to-Image Analysis with the Davidsonian Scene Graph Framework

August 14, 2024

Jin Kim, PhD candidate

DIML Lab, Yonsei University, S. Korea

1. Introduction

1.1 Problem Definition

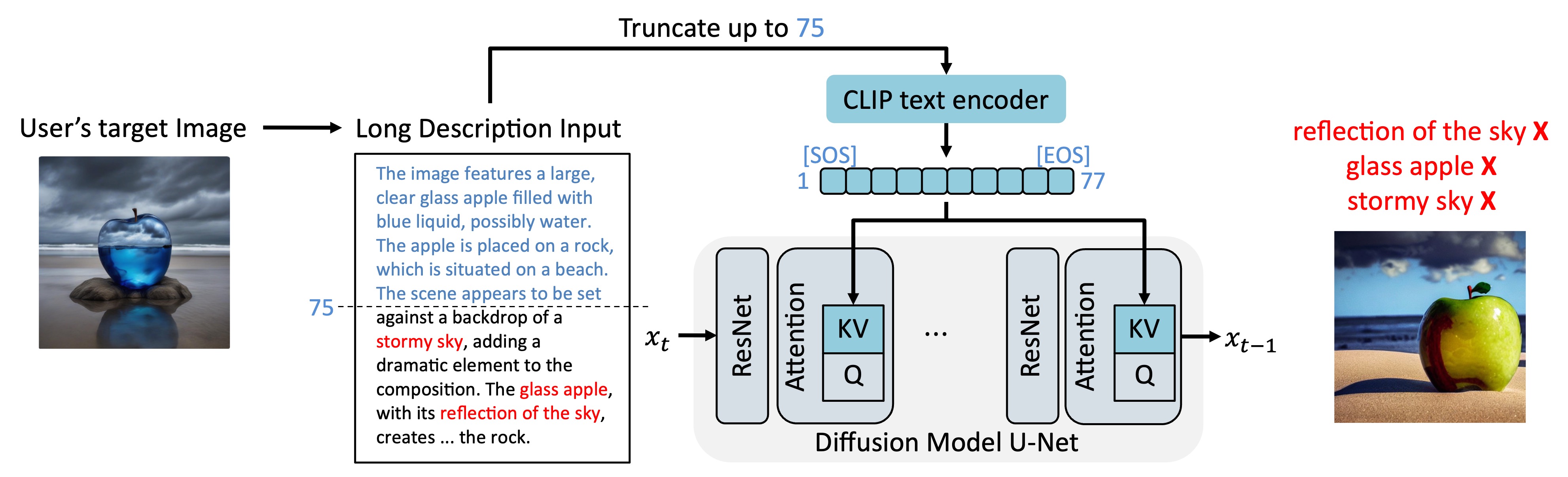

CLIP-based text-to-image (T2I) diffusion models, which dominate the current T2I landscape, face a fundamental limitation: the CLIP text encoder accepts at most 77 tokens of input [1]. This budget covers approximately 3-4 sentences, which severely limits the ability to generate complex scenes that require detailed descriptions.

The example above shows how long prompts are truncated in CLIP-based models: when a description exceeds 77 tokens, the visual elements described in the truncated part (the glass apple reflection, the stormy sky) are missing from the generated image.
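
The truncation itself can be sketched in a few lines. This is a minimal illustration rather than the tokenizer used in the experiments: `truncate_for_clip` is a hypothetical helper and the prompt token ids are placeholders. It assumes the standard CLIP convention that the 77 positions include one start token and one end token, which leaves 75 positions for prompt content.

```python
# Hypothetical helper illustrating the 77-token window; not part of any library.
CLIP_CONTEXT = 77        # fixed context length of the CLIP text encoder
BOS, EOS = 49406, 49407  # start/end token ids of the standard CLIP tokenizer

def truncate_for_clip(token_ids, context=CLIP_CONTEXT):
    """Return (kept, dropped): the encoder input and the tokens lost to truncation."""
    budget = context - 2  # two positions are reserved for BOS and EOS
    kept = [BOS] + list(token_ids[:budget]) + [EOS]
    dropped = list(token_ids[budget:])
    return kept, dropped

# Placeholder ids for a 150-token prompt (the average length reported for the dataset).
prompt_ids = list(range(1000, 1150))
kept, dropped = truncate_for_clip(prompt_ids)
print(len(kept), len(dropped))  # 77 75
```

Under these assumptions, half of an average-length description never reaches the diffusion model at all.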

1.2 Research Objective

This technical report analyzes various approaches to overcome the 77-token limitation in CLIP-based T2I models and presents experimental findings on their effectiveness using comprehensive evaluation metrics.

2. Technical Approaches

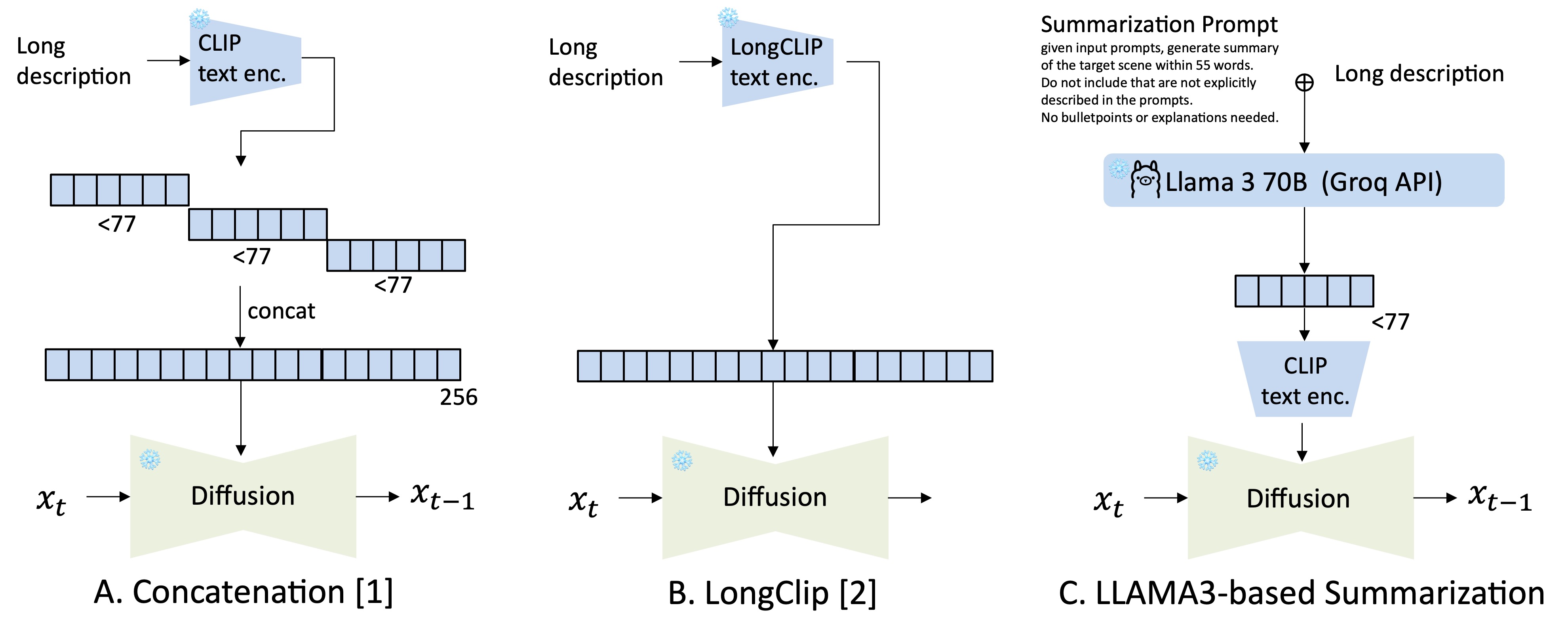

The figure above shows three main approaches for handling extended text sequences: (A) separate CLIP concatenation, which divides the text into chunks; (B) LongCLIP, which extends the positional embeddings to 248 tokens; and (C) LLM-based summarization, which compresses long descriptions.

2.1 Tokenization-based Methods

Separate CLIP Concatenation

- Divides long text into chunks (30/40/50/77 tokens)

- Processes each chunk through CLIP encoder independently

- Concatenates resulting embeddings to form extended condition vector
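
The three steps above can be sketched as follows. This is an illustration under stated assumptions, not the code used in the experiments: `toy_encoder` is a stand-in for the CLIP text encoder, the function names are hypothetical, and per-chunk start/end tokens and padding are omitted for brevity.

```python
def split_into_chunks(token_ids, chunk_size):
    """Split a token sequence into consecutive chunks of at most chunk_size tokens."""
    return [token_ids[i:i + chunk_size] for i in range(0, len(token_ids), chunk_size)]

def toy_encoder(chunk, dim=4):
    """Stand-in for the CLIP text encoder: one dim-sized vector per token."""
    return [[float(tok)] * dim for tok in chunk]

def encode_long_prompt(token_ids, chunk_size, encoder=toy_encoder):
    """Encode each chunk independently and concatenate along the sequence axis."""
    condition = []
    for chunk in split_into_chunks(token_ids, chunk_size):
        condition.extend(encoder(chunk))  # chunks never attend to each other
    return condition

prompt_ids = list(range(150))  # placeholder ids for a 150-token prompt
for size in (30, 40, 50, 77):
    n_chunks = len(split_into_chunks(prompt_ids, size))
    cond = encode_long_prompt(prompt_ids, size)
    print(size, n_chunks, len(cond))
```

The point of the sketch is that the condition sequence handed to cross-attention grows with the prompt, while each chunk is encoded without any context from the others.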

LongCLIP [2]

- Extends CLIP’s positional embeddings via interpolation (77→248 tokens)

- Fine-tunes the extended model on long-text retrieval tasks

- Maintains CLIP’s architecture while extending sequence length capacity
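
The positional-embedding extension can be illustrated with a small sketch. It assumes the scheme described in [2], where the first 20 positions are kept unchanged and the remaining 57 are stretched by a factor of 4 (20 + 57 × 4 = 248). The plain linear resampling below is a simplification, `stretch_positional_embeddings` is a hypothetical name, and the toy table stands in for CLIP's learned embeddings.

```python
def stretch_positional_embeddings(pos, keep=20, target_len=248):
    """Keep the first `keep` rows and linearly resample the rest to reach target_len."""
    head, tail = pos[:keep], pos[keep:]
    n_old, n_new = len(tail), target_len - keep
    stretched = []
    for j in range(n_new):
        t = j * (n_old - 1) / (n_new - 1)  # fractional index into the old rows
        lo = int(t)
        hi = min(lo + 1, n_old - 1)
        w = t - lo                         # interpolation weight toward row `hi`
        stretched.append([(1 - w) * a + w * b for a, b in zip(tail[lo], tail[hi])])
    return [list(row) for row in head] + stretched

# Toy 77 x 2 table standing in for the learned positional embeddings.
pos = [[float(i), float(-i)] for i in range(77)]
long_pos = stretch_positional_embeddings(pos)
print(len(long_pos))  # 248
```

Interpolation only supplies initial values for the new positions; the fine-tuning step listed above is still needed for the encoder to make use of them.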

2.2 Alternative Encoding Strategies

LLM-based Summarization

- Employs GPT-4 or Llama3 to compress long descriptions

- Maintains semantic content within 77-token limit

- Trade-off between compression and detail preservation

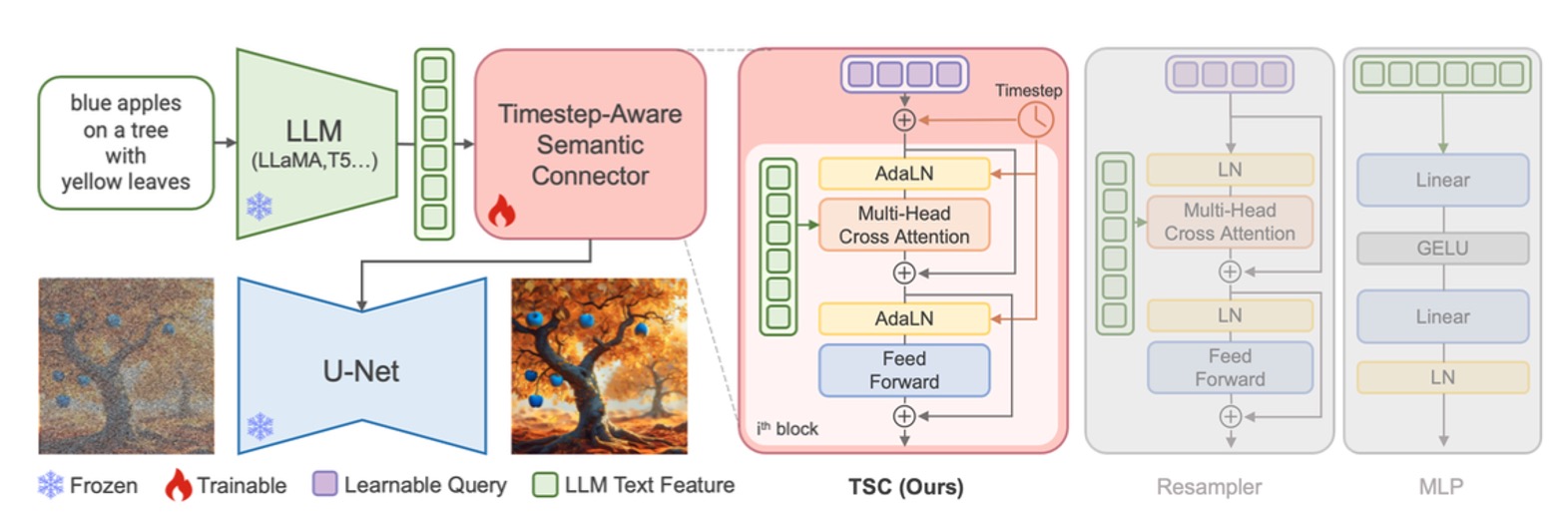

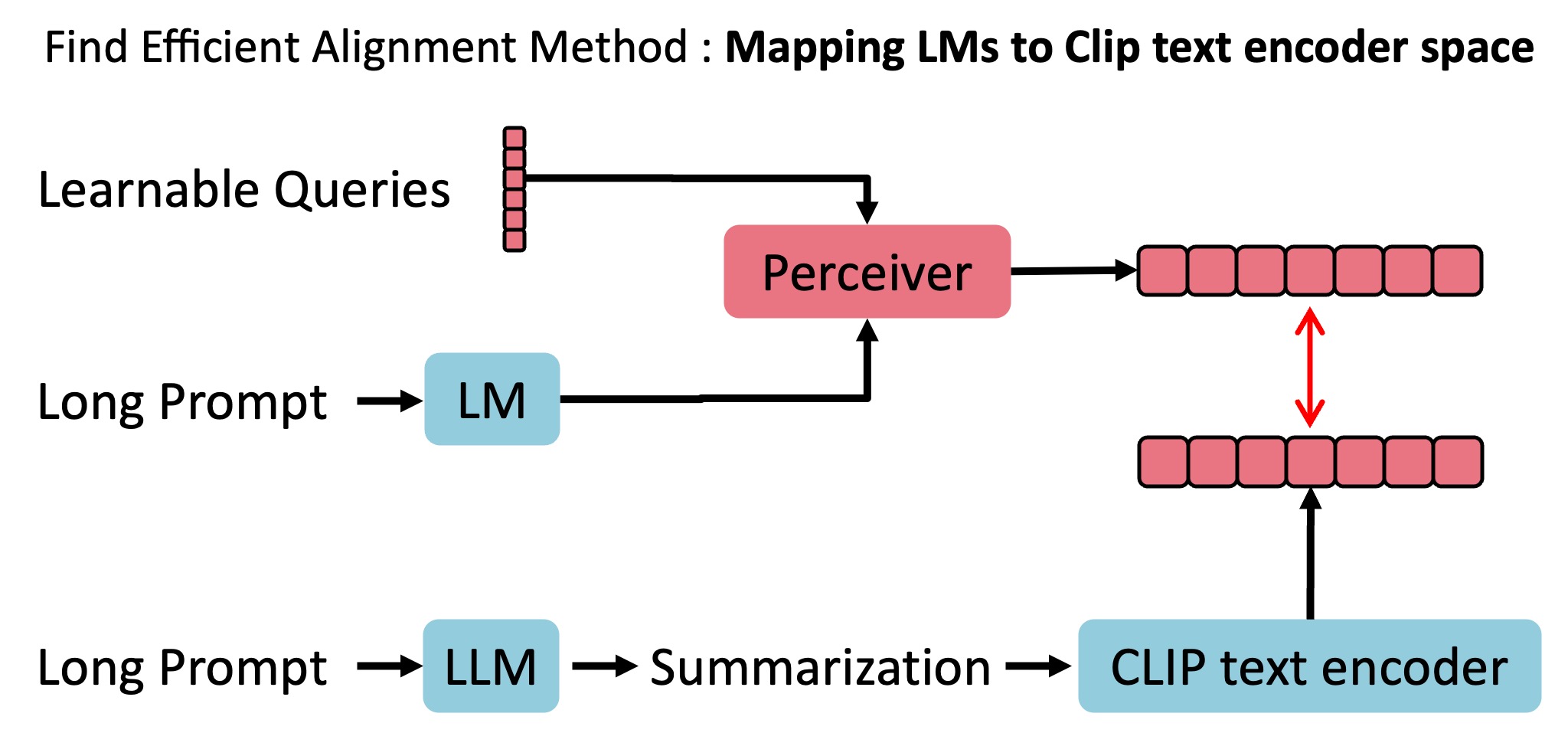

ELLA Framework [3]

- Replaces the CLIP text encoder with a Large Language Model (T5-XL)

- Introduces Timestep-Aware Semantic Connector (perceiver module)

- Learns end-to-end mapping from LLM features to diffusion condition space

- Training scale: 30M images, 56 GPU-days

The ELLA framework uses a Timestep-Aware Semantic Connector (perceiver module) to bridge LLM and diffusion model. The perceiver module employs learnable queries to compress variable-length LLM outputs into fixed-size representations compatible with the frozen diffusion U-Net.
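
How learnable queries turn a variable-length sequence into a fixed-size one can be sketched with a single cross-attention step. This is a minimal illustration, not ELLA's implementation: it omits the key/value projections, multiple heads and layers, and the timestep conditioning, and the queries are random rather than learned. All names are hypothetical.

```python
import math
import random

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def resample(queries, features):
    """Single-head cross-attention: each query attends over all LLM token features."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        scores = [scale * sum(qi * fi for qi, fi in zip(q, f)) for f in features]
        weights = softmax(scores)
        out.append([sum(w * f[d] for w, f in zip(weights, features))
                    for d in range(len(q))])
    return out

random.seed(0)
dim, num_queries = 8, 4
# In a trained connector the queries are learned parameters; here they are random.
queries = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_queries)]

for seq_len in (12, 150):
    llm_features = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)]
    compressed = resample(queries, llm_features)
    print(seq_len, "->", len(compressed), "x", len(compressed[0]))
```

Whatever the input length, the output has `num_queries` rows, which is what lets a frozen U-Net consume prompts of any length through a condition of constant size.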

3. Experimental Methodology

3.1 Dataset

DOCCI Dataset [7] (Google, 2024)

- 15K human-annotated image descriptions

- Average length: 150 tokens

- Validated as superior to GPT-4 generated captions via 5-point Likert scale

3.2 Evaluation Framework

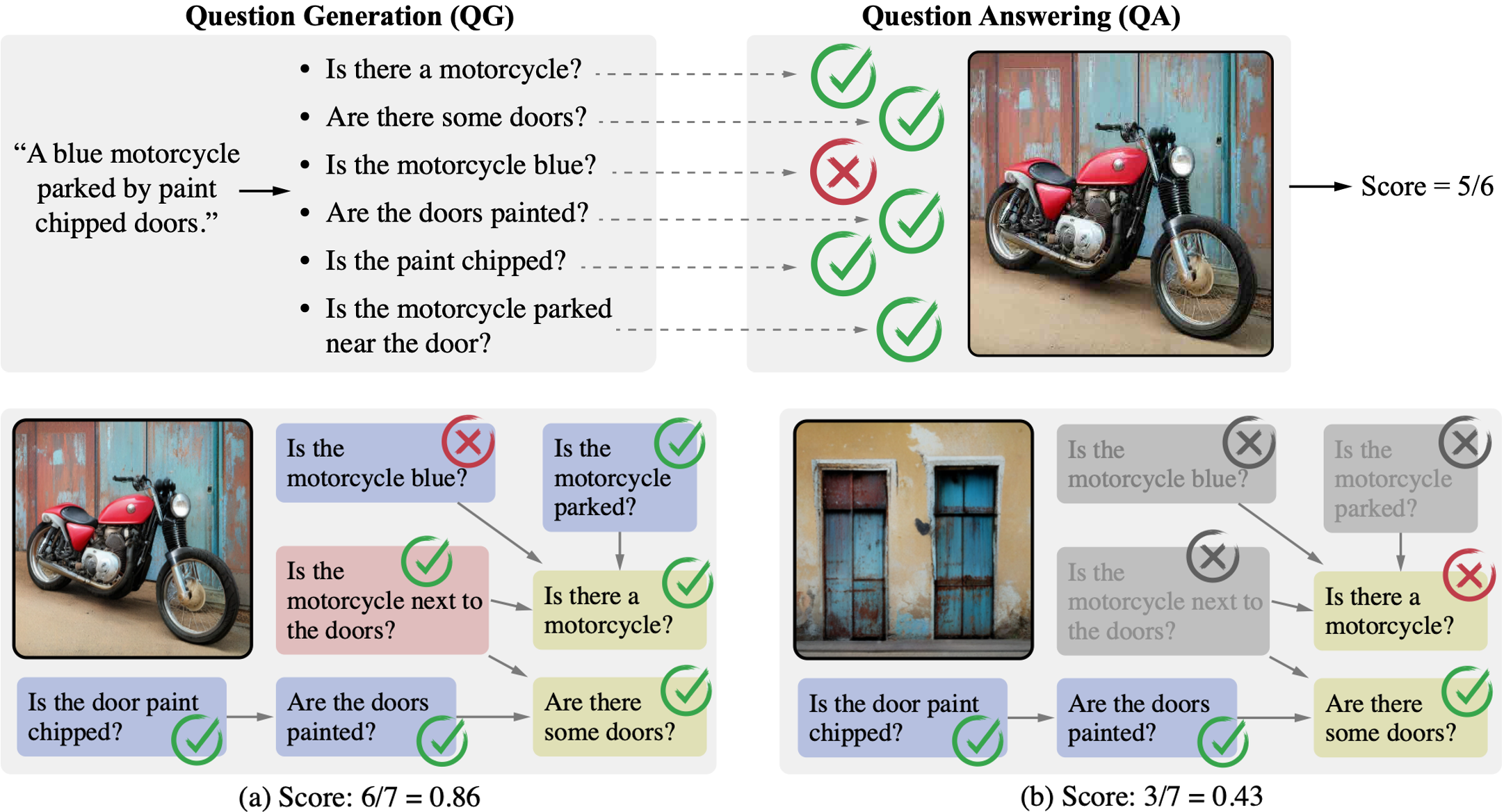

TIFA [4] with DSG Enhancement [5]

- Extended TIFA for long text evaluation

- DSG categories: action, attribute, entity, global, relation, spatial

- VQA model: mPLUG-large [6] (a publicly available alternative to PaLI-17B)

The framework integrates TIFA with Davidsonian Scene Graph (DSG) enhancement, generating question-answer pairs from scene graphs and evaluating generated images using VQA models.
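
The scoring step can be sketched as follows. This is a simplified illustration of DSG-style scoring, assuming that a question counts as correct only when the VQA model answers "yes" to it and to each of its direct parent questions. The data layout, the function name `dsg_score`, and the example questions are hypothetical.

```python
def dsg_score(questions, vqa_yes):
    """Return (overall, per_category) accuracy with dependency filtering.

    questions: {qid: {"category": str, "parents": [qid, ...]}}
    vqa_yes:   {qid: bool}, True when the VQA model answered "yes"
    """
    per_category = {}
    total = 0.0
    for qid, q in questions.items():
        # A question only counts if it and all of its direct parents were answered "yes".
        ok = vqa_yes[qid] and all(vqa_yes[p] for p in q["parents"])
        total += float(ok)
        per_category.setdefault(q["category"], []).append(float(ok))
    overall = total / len(questions)
    return overall, {c: sum(v) / len(v) for c, v in per_category.items()}

# Made-up questions for a scene with a toy shark and a horse.
questions = {
    1: {"category": "entity", "parents": []},        # Is there a toy shark?
    2: {"category": "attribute", "parents": [1]},    # Is the shark blue?
    3: {"category": "entity", "parents": []},        # Is there a horse?
    4: {"category": "spatial", "parents": [1, 3]},   # Is the horse next to the shark?
}
vqa_yes = {1: True, 2: True, 3: False, 4: True}
print(dsg_score(questions, vqa_yes))
# (0.5, {'entity': 0.5, 'attribute': 1.0, 'spatial': 0.0})
```

In the example, question 4 is answered "yes" but does not count, because the horse it depends on was not found. This dependency filtering keeps the score from rewarding answers about objects that are absent from the image.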

3.3 Experimental Setup

- Primary experiments: Stable Diffusion 1.5 (all quantitative results and main analysis)

- Comparative analysis: Stable Diffusion 2.1 (validation of findings)

- Sampling: DDIM with 50 steps

- Baseline: Oracle (original DOCCI descriptions)

4. Results and Analysis

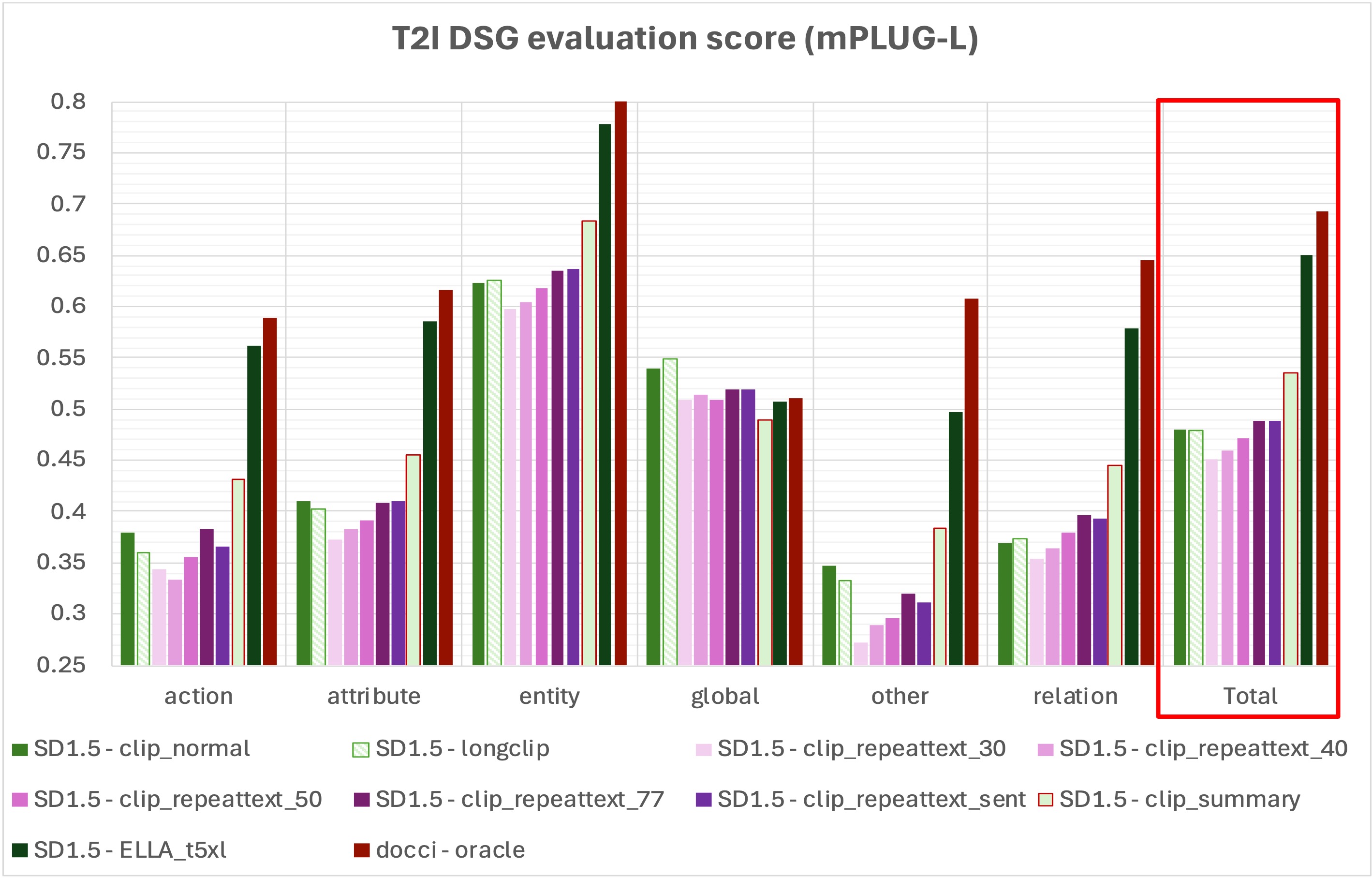

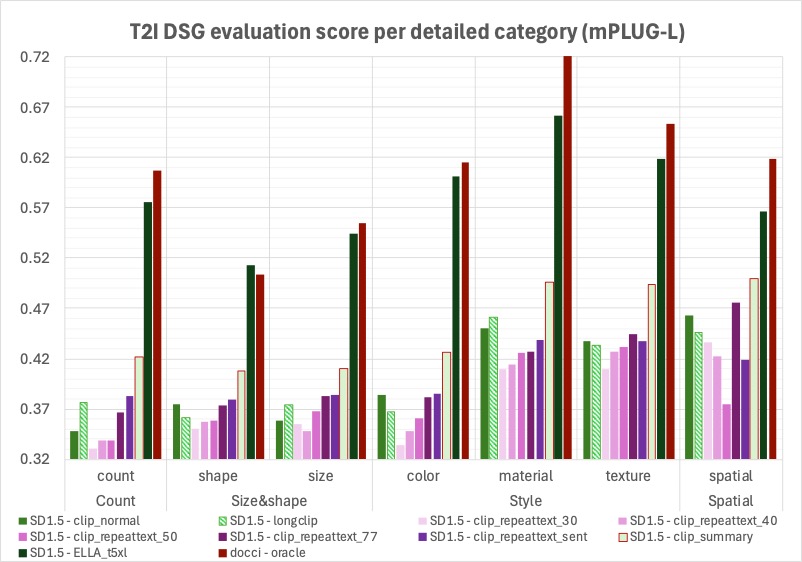

4.1 Overall Performance on SD1.5

Key Results:

- ELLA: 0.66 average DSG score

- Oracle (upper bound): 0.72

- LongCLIP: 0.48 (marginal improvement over standard CLIP at 0.45)

- Concatenation methods show progressive degradation:

  - 77-token chunks: 0.50

  - 50-token chunks: 0.48

  - 40-token chunks: 0.45

  - 30-token chunks: 0.43

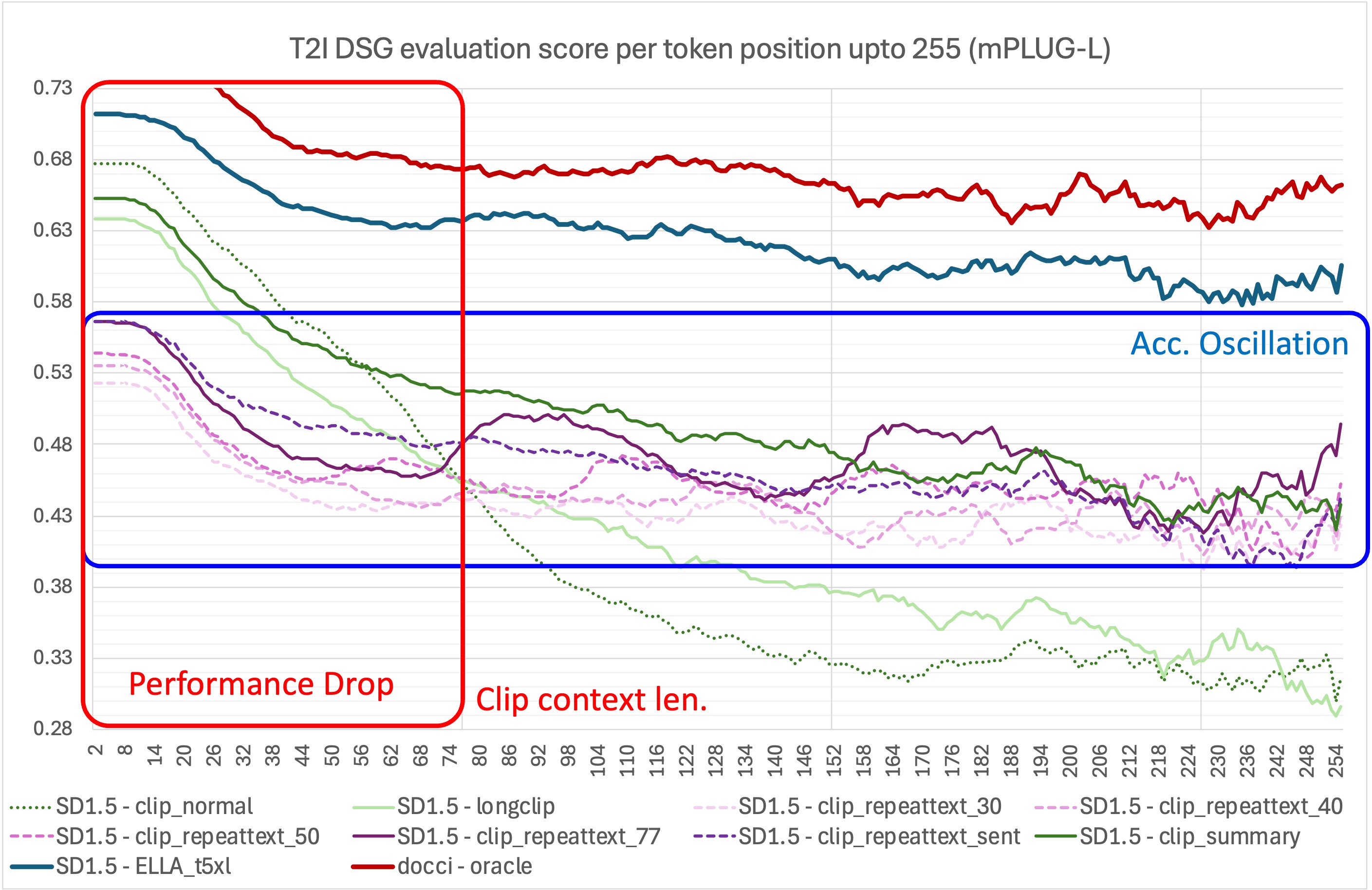

4.2 Token Position Analysis

4.2.1 Stable Diffusion 1.5 Results

The X-axis is the token position, within the test prompt, of the content each question targets; the Y-axis is the average text alignment score. Key observations:

- Sharp performance drop after position 30

- Periodic oscillations every 77 tokens in concatenation methods

- Oracle maintains relatively stable performance
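
The curve behind these observations is a per-position average of question scores. The sketch below shows one way to compute it, assuming each question has already been assigned the token position of the content it targets. The report does not specify how that position is determined, so the pairing is an assumption, and the records are placeholder values rather than experimental data.

```python
from collections import defaultdict

def mean_score_by_position(records, bin_width=10):
    """Average alignment score per token-position bin.

    records: iterable of (token_position, score) pairs, one per question.
    """
    buckets = defaultdict(list)
    for position, score in records:
        buckets[(position // bin_width) * bin_width].append(score)
    return {start: sum(v) / len(v) for start, v in sorted(buckets.items())}

# Placeholder records: (position of the target content, 1.0 if answered correctly).
records = [(5, 1.0), (8, 1.0), (25, 1.0), (28, 0.0), (95, 0.0), (99, 1.0)]
print(mean_score_by_position(records))
# {0: 1.0, 20: 0.5, 90: 0.5}
```

A bin width that divides 77 poorly can blur the periodic pattern, so the choice of `bin_width` matters when looking for oscillations at chunk boundaries.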

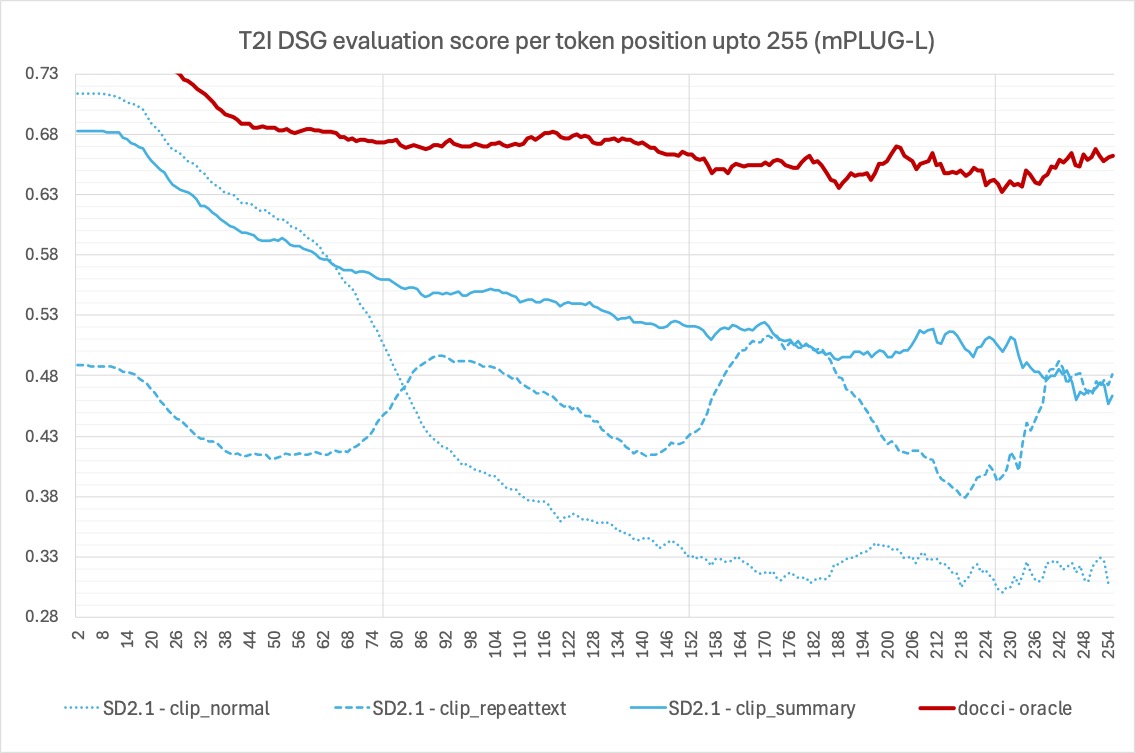

4.2.2 Stable Diffusion 2.1 Validation

SD2.1 shows the same patterns as SD1.5, which indicates that these limitations stem from the CLIP-based architecture rather than from a specific model.

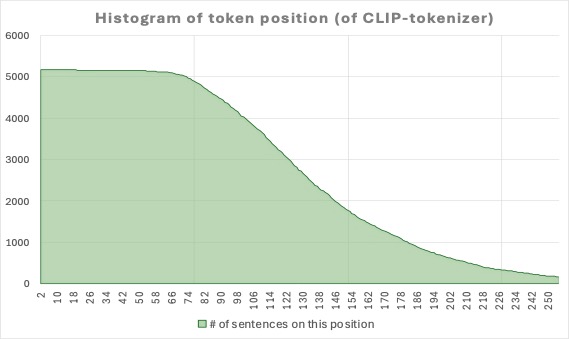

4.3 Dataset Distribution

The question targets cover token positions from 2 to 250+, with peak density around positions 10-50, so the evaluation spans a wide range of prompt lengths.

4.4 Category-Specific Performance

ELLA consistently outperforms all methods across categories:

- Entity recognition: ELLA (0.66) vs concatenation (0.45)

- Spatial relationships: ELLA (0.62) vs concatenation (0.37)

- Material attributes: ELLA (0.66) vs concatenation (0.42)

- Count accuracy: Challenging for all methods (ELLA: 0.37, others: <0.35)

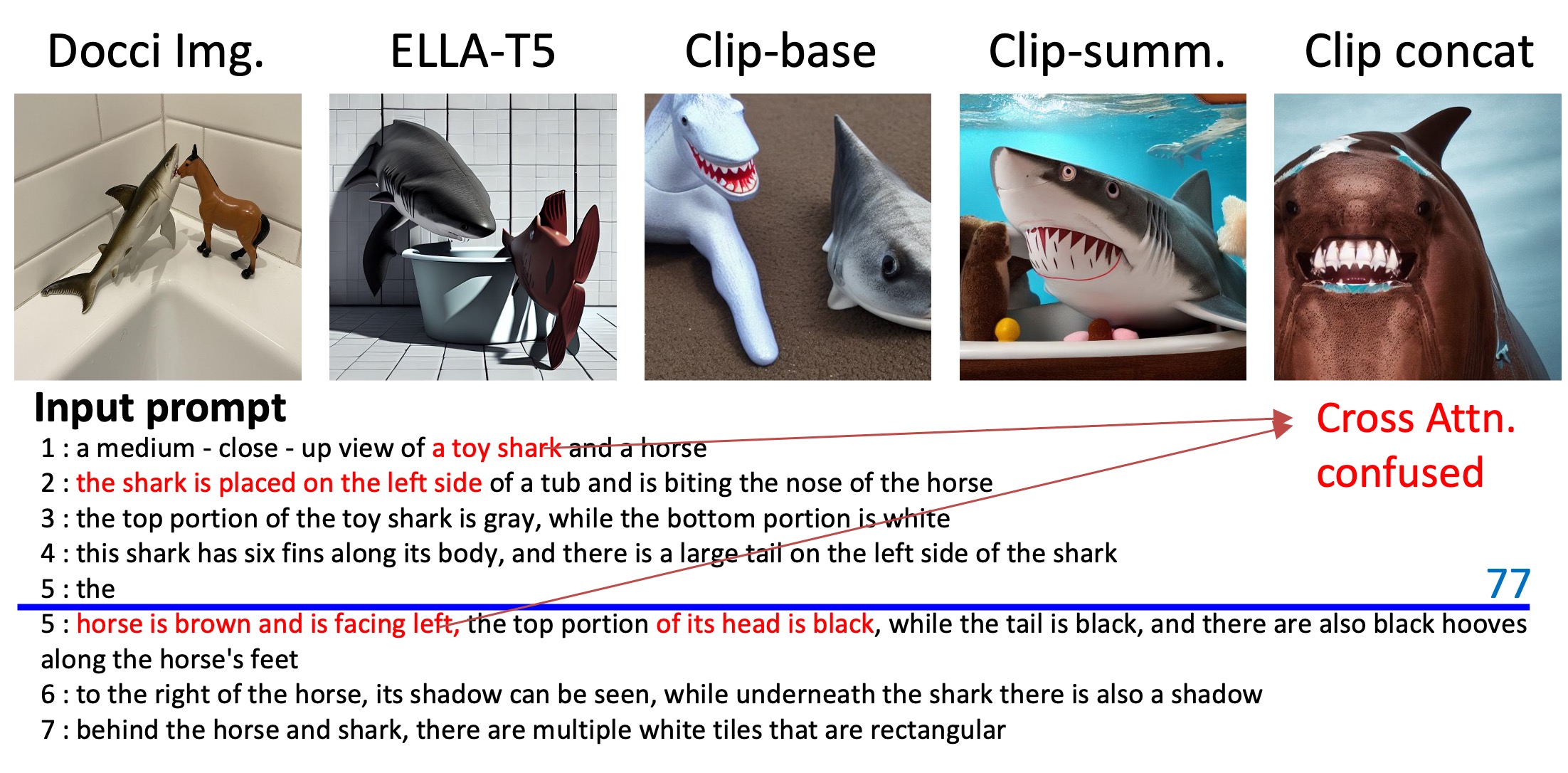

4.5 Qualitative Analysis

The qualitative example is a 7-sentence prompt describing a scene with a toy shark and a horse. Lines 1-4 (within the first 77 tokens) are handled reasonably well by most methods, but content after line 5 (beyond token 77) causes cross-attention confusion in CLIP-based methods. Only ELLA-T5 generates all elements, including the horse (line 6), and maintains the spatial relationships.

Detailed experimental logs and additional analyses across the 15K test samples are available in the full W&B report.

5. Discussion

5.1 Dual Bottleneck in Context Length Limitation

Our experimental results reveal that context length limitation manifests as problems in two critical components:

5.1.1 Text Encoder’s Limited Capability

CLIP shows limited effective encoding even within its 77-token capacity:

- LongCLIP’s gradual degradation despite 248-token extension reveals architectural, not positional, limitations

- Concatenation methods show flat performance with 77-token periodic oscillations

- According to LongCLIP [2], CLIP’s effective encoding length is approximately 20 tokens. Our experimental results confirm this limitation, showing a sharp performance drop after position 30. This discrepancy (20 vs 30) may be due to different evaluation metrics, but both findings consistently demonstrate that CLIP’s effective capacity is far below its 77-token maximum.

5.1.2 U-Net’s Cross-Attention Limitations

Cross-attention struggles with extended condition vectors:

- Concatenated conditions score close to standard CLIP (0.43-0.50 vs 0.45), and fall below it with 30-token chunks, despite carrying more information

- 77-token periodic drops indicate inability to process extended sequences

- Attention mechanism fails to maintain image-text correspondence with length

5.2 Implications for Long-Text Generation

These dual bottlenecks explain why simple architectural extensions fail:

- Extending token limits (LongCLIP) doesn’t address the fundamental encoding inefficiency

- Concatenation approaches are limited by both encoding quality and attention mechanism capacity

- End-to-end learning (ELLA) succeeds because it jointly optimizes compression and alignment

6. Conclusion

6.1 Key Finding

ELLA’s perceiver module architecture [3] achieves superior performance through end-to-end learning, successfully addressing both text encoding limitations and cross-attention constraints inherent in CLIP-based architectures.

6.2 Technical Insights

- Context length limitation stems from dual bottlenecks: CLIP’s limited effective length (~20 tokens) and U-Net’s attention mechanism constraints

- Simple extensions (positional embedding, concatenation) cannot overcome these fundamental architectural limitations

- End-to-end learning with dedicated compression modules (ELLA) provides the most effective solution

7. Future Work

7.1 Primary Challenge

Despite its superior performance, ELLA’s computational requirements (56 GPU-days on A100 GPUs) remain prohibitive for most research settings.

7.2 Research Directions

Efficient Training Strategies:

- Knowledge distillation from pre-trained ELLA models

- Parameter-efficient fine-tuning (LoRA, adapter modules)

- Progressive training schedules with curriculum learning

Proposed Multi-Concept Decomposition:

- Hierarchical prompt processing with concept dependencies

- Parallel U-Net streams for independent concepts

- Reduced computational overhead through modular processing

The proposed architecture uses an LLM to extract concept dependencies, a perceiver module to map features into CLIP space, and parallel processing streams for efficient computation.

7.3 Recommendations

- Develop lightweight perceiver architectures with comparable performance

- Investigate hybrid approaches combining efficient compression with minimal fine-tuning

- Explore modular training strategies enabling incremental capability expansion

References

[1] Radford, A., et al., “Learning Transferable Visual Models From Natural Language Supervision”, ICML’21

[2] Zhang, B., et al., “Long-CLIP: Unlocking the Long-Text Capability of CLIP”, ECCV’24

[3] Hu, X., et al., “ELLA: Equip Diffusion Models with LLM for Enhanced Semantic Alignment”, ECCV’24

[4] Hu, Y., et al., “TIFA: Accurate and Interpretable Text-to-Image Faithfulness Evaluation with Question Answering”, ICCV’23

[5] Cho, J., et al., “Davidsonian Scene Graph: Improving Reliability in Fine-grained Evaluation for Text-to-Image Generation”, ICLR’24

[6] Li, C., et al., “mPLUG: Effective and Efficient Vision-Language Learning by Cross-modal Skip-connections”, EMNLP’22

[7] Onoe, Y., et al., “DOCCI: Descriptions of Connected and Contrasting Images”, arXiv:2404.19753, 2024